Python Web Scraping: Verify SSL certificates for HTTPS requests using requests module

Write a Python program to verify SSL certificates for HTTPS requests using requests module.

Note: Requests verifies SSL certificates for HTTPS requests, just like a web browser. Verification is enabled by default, and Requests raises an SSLError (requests.exceptions.SSLError) if it cannot verify the certificate.
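Because a failed check surfaces as an exception, calling code can catch it instead of letting the traceback end the program. The sketch below wraps `requests.get` in a small helper. The name `check_https` and its `(ok, detail)` return shape are hypothetical choices made for illustration. `requests.exceptions.SSLError` is a subclass of `requests.exceptions.ConnectionError`, so if you also handle connection errors, catch `SSLError` first.

```python
import requests


def check_https(url, timeout=10.0):
    """Fetch url with certificate verification on and report the outcome.

    Hypothetical helper: returns (True, "HTTP <status>") on success or
    (False, <reason>) when the certificate cannot be verified. Other
    network failures (DNS errors, timeouts) still propagate to the caller.
    """
    try:
        # verify=True is already the default; it is spelled out for clarity.
        response = requests.get(url, timeout=timeout, verify=True)
    except requests.exceptions.SSLError as exc:
        # Covers untrusted chains, expired certificates and hostname mismatches.
        return False, f"SSL verification failed: {exc}"
    return True, f"HTTP {response.status_code}"
```

Calling `check_https('https://wayback.com')` would be expected to return `(False, ...)` for as long as that site serves the mismatched certificate seen in the sample output below, while a correctly configured site returns `(True, 'HTTP 200')`.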

Sample Solution:

Python Code:

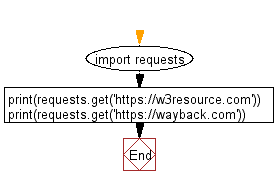

```python
import requests
print(requests.get('https://w3resource.com'))  # verify=True is the default
print(requests.get('https://wayback.com'))  # certificate check fails -> SSLError
```
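The `verify` argument accepts a path to a CA bundle as well as `True` or `False`, which helps when a server's certificate is issued by a private CA. The sketch below sets it once on a `requests.Session`, so every request made through that session uses the same trust store. The function name `make_verified_session` and the bundle path in the usage comment are hypothetical. When no path is given, it falls back to `requests.certs.where()`, the location of the certifi bundle that Requests uses by default.

```python
import requests


def make_verified_session(ca_bundle=None):
    """Return a Session whose requests verify certificates against ca_bundle.

    ca_bundle is a path to a PEM file of trusted CA certificates (for example
    a hypothetical internal root CA). If omitted, the certifi bundle that
    Requests ships with is used.
    """
    session = requests.Session()
    # Session.verify accepts True, False, or a path to a CA bundle.
    session.verify = ca_bundle if ca_bundle is not None else requests.certs.where()
    return session


# Hypothetical usage (performs a network request):
# session = make_verified_session("/etc/ssl/certs/internal-ca.pem")
# print(session.get("https://w3resource.com", timeout=10))
```

Passing `verify=False` switches the check off entirely (urllib3 then emits an `InsecureRequestWarning`). It would let the second request in this exercise go through, but only by removing the protection the exercise is demonstrating.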

Sample Output:

```
<Response [200]>
Traceback (most recent call last):
  File "/usr/local/lib/python3.6/dist-packages/urllib3/connectionpool.py", line 600, in urlopen
    chunked=chunked)
  File "/usr/local/lib/python3.6/dist-packages/urllib3/connectionpool.py", line 343, in _make_request
    self._validate_conn(conn)
  File "/usr/local/lib/python3.6/dist-packages/urllib3/connectionpool.py", line 839, in _validate_conn
    conn.connect()
  File "/usr/local/lib/python3.6/dist-packages/urllib3/connection.py", line 364, in connect
    _match_hostname(cert, self.assert_hostname or server_hostname)
  File "/usr/local/lib/python3.6/dist-packages/urllib3/connection.py", line 374, in _match_hostname
    match_hostname(cert, asserted_hostname)
  File "/usr/lib/python3.6/ssl.py", line 327, in match_hostname
    % (hostname, ', '.join(map(repr, dnsnames))))
ssl.CertificateError: hostname 'wayback.com' doesn't match either of '*.prod.iad2.secureserver.net', 'prod.iad2.secureserver.net'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/local/lib/python3.6/dist-packages/requests/adapters.py", line 449, in send
    timeout=timeout
  File "/usr/local/lib/python3.6/dist-packages/urllib3/connectionpool.py", line 638, in urlopen
    _stacktrace=sys.exc_info()[2])
  File "/usr/local/lib/python3.6/dist-packages/urllib3/util/retry.py", line 398, in increment
    raise MaxRetryError(_pool, url, error or ResponseError(cause))
urllib3.exceptions.MaxRetryError: HTTPSConnectionPool(host='wayback.com', port=443): Max retries exceeded with url: / (Caused by SSLError(CertificateError("hostname 'wayback.com' doesn't match either of '*.prod.iad2.secureserver.net', 'prod.iad2.secureserver.net'",),))

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/tmp/sessions/d2a193285f0c778a/main.py", line 3, in <module>
    print(requests.get('https://wayback.com'))
  File "/usr/local/lib/python3.6/dist-packages/requests/api.py", line 75, in get
    return request('get', url, params=params, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/requests/api.py", line 60, in request
    return session.request(method=method, url=url, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/requests/sessions.py", line 533, in request
    resp = self.send(prep, **send_kwargs)
  File "/usr/local/lib/python3.6/dist-packages/requests/sessions.py", line 646, in send
    r = adapter.send(request, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/requests/adapters.py", line 514, in send
    raise SSLError(e, request=request)
requests.exceptions.SSLError: HTTPSConnectionPool(host='wayback.com', port=443): Max retries exceeded with url: / (Caused by SSLError(CertificateError("hostname 'wayback.com' doesn't match either of '*.prod.iad2.secureserver.net', 'prod.iad2.secureserver.net'",),))
```
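The first traceback shows where the failure originated: a hostname check (`match_hostname`) rejected a certificate whose names cover `*.prod.iad2.secureserver.net` but not `wayback.com`. Python's standard `ssl` module enforces the same two kinds of check in its default client context: the certificate chain must lead to a trusted CA, and the certificate must cover the requested hostname. The stdlib sketch below makes those settings visible without any network access. It inspects `ssl.create_default_context()`, not the context urllib3 builds internally, so treat it as an illustration of the checks rather than of Requests' exact configuration. The helper name is hypothetical.

```python
import ssl


def describe_default_tls_checks():
    """Report the verification settings of Python's default client TLS context."""
    ctx = ssl.create_default_context()
    return {
        # Reject servers whose certificate chain does not lead to a trusted CA.
        "certificate_required": ctx.verify_mode == ssl.CERT_REQUIRED,
        # Reject certificates whose names do not cover the requested host
        # (the check that failed for wayback.com in the sample output).
        "hostname_checked": ctx.check_hostname,
    }


print(describe_default_tls_checks())
# → {'certificate_required': True, 'hostname_checked': True}
```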
